Publish Date: 01/09/2025

Your board just asked a simple question: "We have spent $3M on AI this year. What is the ROI?"

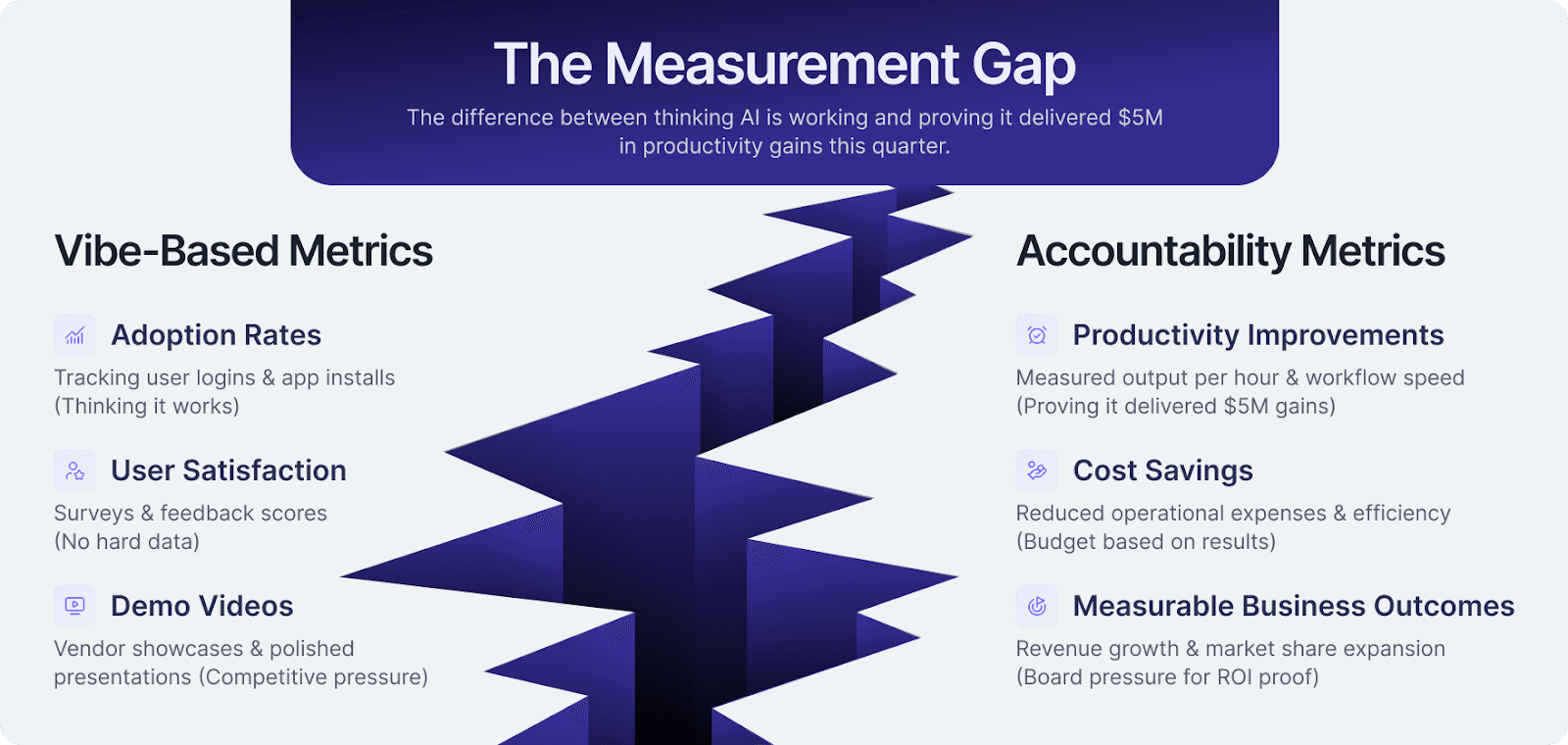

You can show adoption rates, user satisfaction scores, and impressive demos. But actual productivity gains? Measurable business value? Quantifiable outcomes? You are answering with anecdotes and assumptions.

Key Takeaway

Enterprise AI investments will reach $644 billion in 2025 according to Gartner, yet 72% are destroying value through waste according to the Larridin State of Enterprise AI 2025 Report.

The accountability problem: Organizations track AI adoption, but almost none measure actual productivity improvements or business value generation. That gap is the difference between thinking AI is working and proving it delivered measurable return on investment.

The measurement imperative: The next phase of AI transformation is accountability. Executives who can prove productivity gains, demonstrate measurable AI ROI, and show clear value realization will secure continued investment and accelerate their own career growth. Those stuck with vibe-based metrics face budget cuts and lost credibility.

Key Terms

- AI ROI Measurement: Quantifying business value generated from AI investments through productivity improvements, cost savings, and outcome achievement. Goes beyond adoption metrics to measure actual financial returns.
- Vibe-Based AI Spending: Investment decisions driven by vendor demonstrations, competitive pressure, and executive enthusiasm without measurable outcomes or accountability frameworks. Characterized by adoption metrics replacing outcome metrics.
- Productivity Measurement: Quantification of efficiency gains, time savings, output increases, and quality improvements attributable to using AI. Essential for proving business value rather than just tool access.
- Business Value Framework: Structured approach connecting AI spending to measurable business outcomes including financial metrics, productivity gains, strategic objectives, and competitive advantages.
- Accountability Era: Current phase of AI transformation where every AI dollar must demonstrate return. Characterized by board pressure for ROI proof and budget allocation based on measured results rather than assumptions.

The accountability problem: The era of vibe-based AI spending is over. Enterprises invested billions based on vendor promises and competitive pressure. Now executives demand proof. How much more productive is AI making your company? The biggest AI risk is not security breaches; it is spending millions without measurable ROI.

A recent MIT study found that 95% of enterprise AI initiatives fail to deliver measurable return on investment. Despite $30 to $40 billion in enterprise investment, most organizations see zero return from their AI projects. Why? They are measuring the wrong things.

The measurement gap: Most organizations track AI adoption and usage. Almost none measure actual productivity improvements or business value generation. That gap represents the difference between thinking AI is working and proving it delivered $5M in productivity gains this quarter.

This guide covers the essential AI ROI framework that measures real productivity, proves business value, and transforms vibe-based spending into accountable investment. This is AI measurement for CFOs and business leaders entering the accountability era, where every AI dollar must demonstrate return.

Why Vibe-Based AI Spending Creates Unacceptable Waste

What Vibe-Based AI Investment Looks Like

Investment decisions are driven by vendor demonstrations and marketing materials. Competitive pressure replaces strategic analysis. Executive enthusiasm runs ahead of any measurement framework. Adoption metrics stand in for outcome metrics. The mantra: "Trust us, it is working," with no quantifiable proof.

According to research from Wharton, 72% of business leaders say they now have structured processes for measuring AI ROI using key metrics including employee productivity, profitability, and operational efficiency. Yet Gartner reports that nearly half of business leaders say proving generative AI business value remains the single biggest hurdle to AI adoption.

The disconnect reveals the problem. Organizations implement measurement frameworks, but often measure the wrong things. S&P Global data shows the share of companies abandoning most of their AI projects jumped to 42% in 2025 from just 17% the year prior, citing total cost and unclear value as top reasons.

What Vibe-Based Spending Hides

- Actual productivity improvements, or the lack of them
- Real business value generated per dollar spent on AI implementation
- Which use cases and AI tools drive ROI and which waste money
- Proficiency gaps preventing value realization from AI systems
- Investment efficiency and optimization opportunities across AI technologies

The Accountability Gap in Numbers

Organizations track AI adoption: 60–70% of employees use AI tools. But most organizations cannot answer the questions that matter:

- How much are employees using the AI tools?
- What are they using the AI tools to do?
- How well are they using the AI tools?
- How much more productive are those users?

Investment decisions rest on assumptions, not measured business outcomes.

Example: A $2M AI investment generates impressive usage statistics but zero quantified productivity gains or business value. That is the critical gap between AI implementation and measurable financial impact.

The problem extends beyond measurement capability. Each AI platform defines metrics differently. One vendor defines active users as monthly logins. Another counts weekly engagement. A third measures API calls. Finance teams receive incompatible data sets that cannot be consolidated or used for benchmarks. And AI embedded in existing SaaS solutions has virtually no metrics at all, even though you pay more for that functionality.
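A small illustration of why these numbers cannot be consolidated: the Python sketch below applies three definitions like the ones above to the same hypothetical event log. The `Event` record, its event types, and the sample data are assumptions for illustration, not any vendor's actual schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Event:
    user: str
    day: date
    kind: str  # hypothetical event types: "login" or "api_call"


def monthly_active_users(events):
    """Definition A: distinct users with at least one login in the period."""
    return len({e.user for e in events if e.kind == "login"})


def avg_weekly_active_users(events):
    """Definition B: mean number of distinct users with any event per ISO week."""
    weekly = defaultdict(set)
    for e in events:
        year, week, _ = e.day.isocalendar()
        weekly[(year, week)].add(e.user)
    if not weekly:
        return 0.0
    return sum(len(users) for users in weekly.values()) / len(weekly)


def api_call_volume(events):
    """Definition C: raw count of API calls, regardless of who made them."""
    return sum(1 for e in events if e.kind == "api_call")


# One hypothetical log for March 2025 (March 3 and March 10 are Mondays)
events = [
    Event("ana", date(2025, 3, 3), "login"),
    Event("ben", date(2025, 3, 4), "login"),
    Event("dan", date(2025, 3, 5), "login"),
    Event("ana", date(2025, 3, 10), "login"),
    Event("cai", date(2025, 3, 12), "api_call"),
    Event("cai", date(2025, 3, 12), "api_call"),
    Event("cai", date(2025, 3, 13), "api_call"),
    Event("cai", date(2025, 3, 14), "api_call"),
]

print(monthly_active_users(events))     # 3
print(avg_weekly_active_users(events))  # 2.5
print(api_call_volume(events))          # 4
```

The same four people yield three different "usage" figures, so finance cannot add or compare vendor numbers until it fixes one definition and recomputes each figure from raw events.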

Why CFOs and CIOs Need ROI Measurement Frameworks

CFO Accountability Priorities

KPMG research shows that investor pressure to demonstrate ROI on generative AI investments has intensified dramatically. In Q1 2025, 90% of organizations rated investor pressure to demonstrate ROI as important or very important, up sharply from 68% in Q4 2024. Stakeholders demand proof of financial returns.

CFOs need to prove AI ROI to the board and shareholders, connect AI spending to measurable business outcomes, optimize investment based on value generation data, forecast returns and justify continued investment, and identify waste before it costs millions in budget.

Boards face D&O liability from inadequate AI oversight and governance failures. Insurers will demand complete AI inventories, usage monitoring, and audit trails. Without continuous documentation of shadow AI and governance controls, companies risk claims and policy rescission.

CIO Strategic Imperatives

CIOs need to connect AI spending to business outcomes that CFOs value, optimize the technology portfolio and vendor relationships based on measurable performance, forecast capacity needs and infrastructure costs accurately, report progress to the board confidently using data, and defend technology budgets with proof of value creation.

The Measurement Problem Nobody Talks About

There are no standardized AI ROI metrics. Every vendor uses different definitions. Active user means different things across platforms. Productivity improvement calculations vary wildly. Cost per outcome is not defined. Organizations cannot benchmark against competitors or best practices because comparable data does not exist.

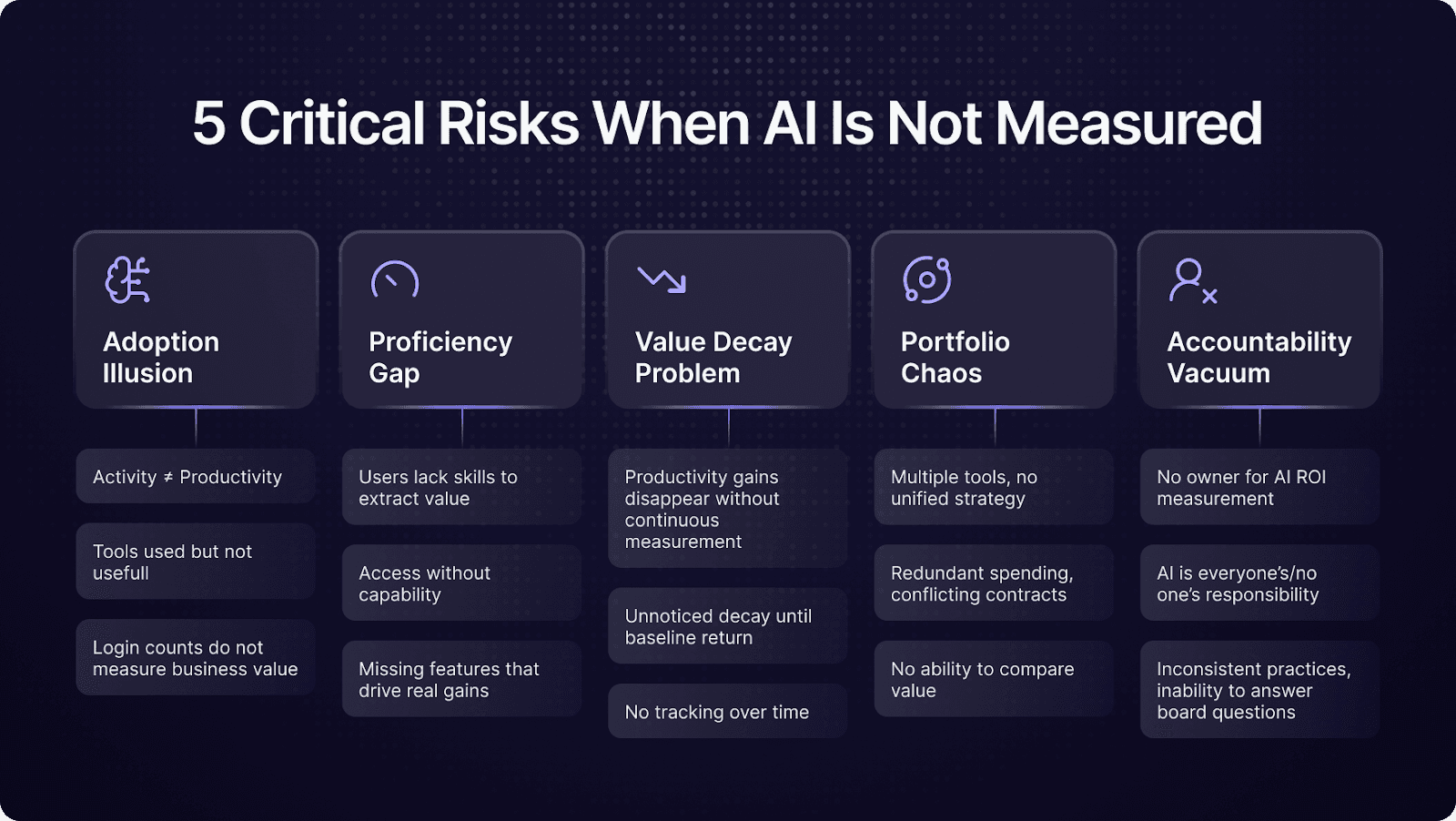

The Five Pillars of AI ROI Risk

There are five critical risk areas when AI investments lack proper measurement frameworks. Each pillar represents a failure mode that destroys value and wastes budget. Understanding these risks drives the business case for comprehensive ROI measurement.

Pillar 1: The Adoption Illusion

High usage rates mask zero productivity improvement. Organizations celebrate 70% adoption without measuring whether those users accomplish more work, complete tasks faster, or generate better outcomes. The adoption metric becomes the goal instead of the means.

Example scenario: A company deploys an AI writing assistant and reports 5,000 monthly active users. Finance asks: How much more productive are those users? Answer: We do not know. The measurement stopped at adoption. The business value question remains unanswered.

Why adoption alone fails: Tools may be used but not useful, users may experiment without meaningful integration, activity does not equal productivity, login counts do not measure business outcomes, and satisfaction surveys do not prove value generation.

Pillar 2: The Proficiency Gap

AI tools are available, but users lack skills to extract value. Organizations provide access without ensuring capability. The proficiency gap prevents value realization even when adoption appears strong.

Example scenario: The sales team gets an AI research tool, and adoption is 80%. But users only scratch the surface, missing advanced features that drive real productivity gains. The tool can save hours per deal. Users save minutes because they do not know what it can do.

Proficiency measurement reveals: Skill levels across teams and roles, training effectiveness and gaps, feature utilization depth, productivity correlation with expertise, and where investment in enablement pays off.

Pillar 3: The Value Decay Problem

Initial productivity gains disappear without measurement. Organizations see early wins that fade as novelty wears off, processes drift, skills atrophy, integration breaks, or users revert to old habits. Without continuous measurement, value erosion goes unnoticed until productivity returns to baseline.

The measurement solution: Track productivity over time beyond pilot phase, identify value decay before it becomes critical, correlate proficiency maintenance with sustained gains, justify continued investment in training and optimization, and prove AI delivers lasting competitive advantage.
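One way to identify value decay before it becomes critical is to compare recent gains with the gains observed during the pilot. This sketch assumes you already record weekly hours saved per user against a pre-AI baseline; the window sizes and the 50% threshold are hypothetical defaults, not established benchmarks.

```python
from statistics import mean


def detect_value_decay(weekly_gains, pilot_weeks=4, window=4, threshold=0.5):
    """Compare the recent average gain with the pilot-phase average gain.

    weekly_gains: hours saved per user per week, each measured against the
    pre-AI baseline, oldest first. pilot_weeks, window, and threshold are
    hypothetical defaults to tune against your own data.
    """
    if len(weekly_gains) < pilot_weeks + window:
        raise ValueError("need at least pilot_weeks + window observations")
    pilot_avg = mean(weekly_gains[:pilot_weeks])
    recent_avg = mean(weekly_gains[-window:])
    # Share of the pilot-phase gain that is still being realized
    retained = recent_avg / pilot_avg if pilot_avg > 0 else 0.0
    return {
        "pilot_avg": pilot_avg,
        "recent_avg": recent_avg,
        "retained_share": retained,
        "decaying": retained < threshold,
    }


# Hypothetical series: strong pilot, then gradual erosion toward baseline
gains = [3.0, 3.2, 3.1, 2.9, 2.5, 2.0, 1.6, 1.2, 1.0, 0.8]
result = detect_value_decay(gains)
print(f"{result['retained_share']:.0%} of pilot gains retained; decaying={result['decaying']}")
# 38% of pilot gains retained; decaying=True
```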

Pillar 4: The Portfolio Chaos

Multiple AI tools with no unified measurement strategy. Every department selects its own solutions. IT loses visibility. Finance cannot consolidate spending or compare value across investments. The AI portfolio becomes a collection of unmanaged experiments.

Organizations discover: Three customer service AI tools, five different coding assistants, seven writing tools across departments, conflicting vendor contracts and redundant spending, and zero ability to answer which investments work best.

Portfolio-level measurement enables: Unified ROI dashboard across all AI investments, vendor performance comparison using consistent metrics, identification of redundant tools and consolidation opportunities, data-driven budget allocation to highest-value solutions, and strategic decisions about build versus buy versus partner.
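A minimal sketch of the redundancy check, assuming each tool has already been assigned a category and measured with the same cost and value definitions (the `Tool` fields and dollar figures are hypothetical). It groups tools by category and ranks overlapping tools by ROI, using (Value - Investment) / Investment x 100.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Tool:
    name: str
    category: str        # e.g. "coding assistant"
    annual_cost: float   # licensing + implementation + training
    annual_value: float  # measured value, already converted to dollars

    @property
    def roi_pct(self):
        return (self.annual_value - self.annual_cost) / self.annual_cost * 100


def consolidation_candidates(tools):
    """Return only categories with more than one tool, each ranked best ROI first."""
    by_category = defaultdict(list)
    for tool in tools:
        by_category[tool.category].append(tool)
    return {
        category: sorted(group, key=lambda t: t.roi_pct, reverse=True)
        for category, group in by_category.items()
        if len(group) > 1
    }


tools = [
    Tool("Assistant A", "coding assistant", 100_000, 250_000),
    Tool("Assistant B", "coding assistant", 80_000, 90_000),
    Tool("Writer X", "writing", 40_000, 60_000),
]
candidates = consolidation_candidates(tools)
for category, ranked in candidates.items():
    print(category, [(t.name, round(t.roi_pct, 1)) for t in ranked])
# coding assistant [('Assistant A', 150.0), ('Assistant B', 12.5)]
```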

Pillar 5: The Accountability Vacuum

No owner for AI ROI measurement and reporting. Without accountability, measurement never happens systematically. AI becomes everyone's responsibility and therefore no one's responsibility.

The accountability problem manifests as: Inconsistent or absent measurement practices, inability to answer board questions about ROI, conflicting claims about AI value across departments, no process for tracking and optimizing investment, and continued spending despite unclear returns.

Measurement frameworks establish accountability by assigning ownership to specific roles, defining clear reporting cadences and formats, creating escalation paths when ROI falls short, connecting measurement to budget allocation authority, and making AI value transparent to all stakeholders.

Building Your AI ROI Measurement Framework

Comprehensive AI ROI measurement requires a framework that combines quantitative metrics, qualitative outcomes, and continuous monitoring. This framework proves value to stakeholders, optimizes investment allocation, and guides strategic decisions about the AI portfolio.

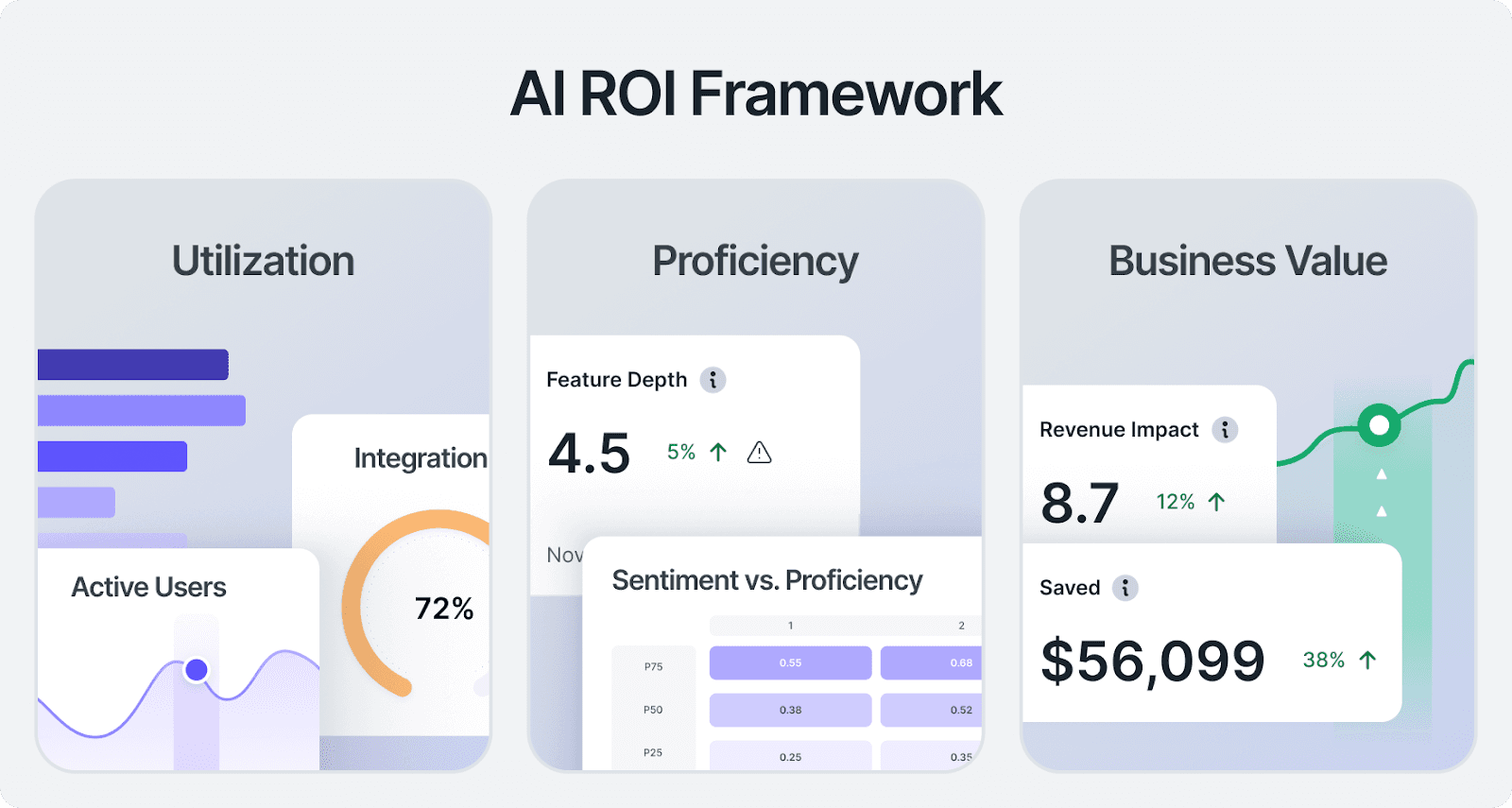

Framework Architecture: Three Core Components

Effective AI ROI measurement integrates three interdependent components. Each addresses different stakeholder needs; together, they provide a unified view of AI value creation.

Component 1: Utilization Measurement

Track who uses AI, how frequently, and which features drive engagement. Utilization provides the foundation for understanding adoption patterns and identifying optimization opportunities.

Key utilization metrics include: Active users by role, function, and seniority, usage frequency and consistency patterns, feature adoption and depth of integration, workflow integration completeness, and adoption trajectory over time.

Utilization alone does not prove ROI, but it provides the data needed for: identifying under-adopted tools that require intervention, correlating proficiency with usage patterns, targeting training at low-engagement segments, and establishing a baseline for measuring productivity improvements.
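Several of the utilization metrics above can be computed from a simple usage log. This sketch assumes a hypothetical export of `(user, role, day)` records plus a headcount per role; real tools expose different log formats, so treat the input shape as an assumption.

```python
from collections import defaultdict


def utilization_by_role(usage_days, headcount):
    """Summarize AI utilization per role.

    usage_days: iterable of (user, role, day) records, one or more per day a
    user touched an AI tool (a hypothetical export format).
    headcount: dict mapping role -> number of employees in that role.
    """
    days_per_user = defaultdict(set)
    role_of = {}
    for user, role, day in usage_days:
        days_per_user[user].add(day)  # repeated records on the same day count once
        role_of[user] = role
    summary = {}
    for role, total in headcount.items():
        users = [u for u, r in role_of.items() if r == role]
        active_days = [len(days_per_user[u]) for u in users]
        summary[role] = {
            "active_users": len(users),
            "adoption_rate": len(users) / total if total else 0.0,
            "avg_active_days": sum(active_days) / len(users) if users else 0.0,
        }
    return summary


usage = [
    ("ana", "sales", 1), ("ana", "sales", 2), ("ana", "sales", 2),
    ("ben", "sales", 5), ("cai", "finance", 3),
]
for role, stats in utilization_by_role(usage, {"sales": 4, "finance": 2, "legal": 3}).items():
    print(role, stats)
```

Roles with zero active users (here, legal) are exactly the under-adopted segments that need intervention.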

Component 2: Proficiency Measurement

Assess capability to extract value from AI tools. Proficiency measurement reveals skill gaps preventing value realization and guides enablement investment.

Proficiency measurement approaches: Skills assessments and capability benchmarking, feature utilization depth analysis, productivity correlation with expertise levels, self-reported competency tracking, and outcome quality comparison across skill levels.

Proficiency measurement drives ROI by: Identifying training needs before they impact productivity, correlating skill development with business outcomes, optimizing enablement investment for maximum return, proving the business case for change management, and accelerating time-to-value for new AI investments.
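To illustrate feature utilization depth and productivity correlation with expertise, the sketch below scores each user by the share of a tool's features they use and correlates that score with measured hours saved. The feature names, users, and figures are hypothetical, and Pearson correlation is written out by hand so the example runs on any Python 3.

```python
from math import sqrt
from statistics import mean


def feature_depth(features_used, available_features):
    """Share of a tool's available features that a user has actually used (0.0 to 1.0)."""
    return len(set(features_used) & set(available_features)) / len(available_features)


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


AVAILABLE = {"summarize", "draft", "search", "analyze", "automate"}  # hypothetical

# user -> (features used, measured hours saved per week); hypothetical data
users = {
    "ana": ({"summarize"}, 0.5),
    "ben": ({"summarize", "draft"}, 1.0),
    "cai": ({"summarize", "draft", "search", "analyze"}, 3.0),
    "dan": ({"summarize", "draft", "search", "analyze", "automate"}, 4.0),
}

depths = [feature_depth(used, AVAILABLE) for used, _ in users.values()]
hours = [saved for _, saved in users.values()]
print(f"depth/hours-saved correlation: {pearson(depths, hours):.2f}")  # 0.99
```

With four users the result is illustrative only; a real analysis needs a larger sample, and even a strong correlation does not show that deeper feature use causes the time savings.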

Component 3: Business Value Measurement

Quantify actual business outcomes attributable to AI usage. Business value measurement connects AI spending to financial returns, productivity gains, and strategic objectives.

Business value metrics vary by use case but typically include: Time savings quantified in hours and dollar value, quality improvements measured through output assessment, cost reductions from automation and efficiency, revenue impact from enhanced productivity, and strategic value including competitive advantage.

Establishing Baseline Metrics

ROI measurement requires baseline comparison. Organizations must measure productivity before deploying AI to be able to prove improvement after implementation. Without baseline metrics, all ROI claims remain anecdotal.

Baseline measurement approach: Document current state performance metrics, identify key workflows AI will impact, measure time requirements for standard tasks, assess quality levels and error rates, and calculate current costs and resource allocation.

Baseline enables: Accurate before-and-after productivity comparison, ROI calculation grounded in measurable improvement, credible reporting to stakeholders with data proof, identification of areas with highest improvement potential, and validation that AI delivers promised business value.
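A before-and-after comparison per workflow might look like the sketch below. It assumes task times were measured with the same task definition before and after deployment; the task names, minutes, and volumes are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TaskMeasurement:
    task: str
    baseline_minutes: float  # measured before AI deployment
    current_minutes: float   # measured after deployment, same task definition
    monthly_volume: int      # times the task is performed per month

    @property
    def time_reduction_pct(self):
        return (self.baseline_minutes - self.current_minutes) / self.baseline_minutes * 100

    @property
    def hours_saved_per_month(self):
        return (self.baseline_minutes - self.current_minutes) * self.monthly_volume / 60


tasks = [
    TaskMeasurement("account research", baseline_minutes=90, current_minutes=60, monthly_volume=200),
    TaskMeasurement("proposal draft", baseline_minutes=120, current_minutes=90, monthly_volume=40),
]
for t in tasks:
    print(f"{t.task}: {t.time_reduction_pct:.1f}% faster, {t.hours_saved_per_month:.0f} hours/month")
print("total hours saved per month:", sum(t.hours_saved_per_month for t in tasks))  # 120.0
```

A negative reduction would signal that AI slowed the task down, which is exactly what a baseline makes visible.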

Calculating AI ROI: The Formula

AI ROI calculation requires quantifying both value generated and total investment cost. This formula provides the framework for financial accountability.

Value Generated = productivity gains measured in time savings x fully-loaded hourly cost + cost reductions from automation and efficiency + revenue increases attributable to AI usage + quality improvements quantified in dollar terms

Total Investment includes licensing and subscription costs, implementation and integration expenses, training and change management investment, ongoing support and maintenance costs, and infrastructure and technical debt.

ROI Percentage = (Value Generated - Total Investment) / Total Investment x 100

Example calculation:

- Sales team of 50 using an AI research tool.
- Average time saved: 3 hours per week per person.
- Fully-loaded cost: $75 per hour.
- Annual productivity value: 50 people x 3 hours x 52 weeks x $75 = $585,000.
- Tool cost including implementation and training: $150,000 annually.

ROI: ($585,000 - $150,000) / $150,000 x 100 = 290%
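The formula and the sales-team example translate directly into code. This sketch mirrors the Value Generated and ROI Percentage definitions above; the function names are illustrative. It also computes the value-to-investment multiple, which is a different number from ROI percentage and easy to confuse with it.

```python
def value_generated(hours_saved, hourly_cost, cost_reductions=0.0,
                    revenue_increase=0.0, quality_value=0.0):
    """Time savings x fully-loaded hourly cost, plus other gains already in dollars."""
    return hours_saved * hourly_cost + cost_reductions + revenue_increase + quality_value


def roi_pct(value, total_investment):
    """ROI Percentage = (Value Generated - Total Investment) / Total Investment x 100."""
    return (value - total_investment) / total_investment * 100


def return_multiple(value, total_investment):
    """Value Generated / Total Investment (the 'Nx' figure, not the ROI percentage)."""
    return value / total_investment


# Sales-team example: 50 people x 3 hours/week x 52 weeks at $75/hour
hours = 50 * 3 * 52
value = value_generated(hours, hourly_cost=75)
investment = 150_000  # tool cost including implementation and training

print(f"Value: ${value:,.0f}")                                        # Value: $585,000
print(f"ROI: {roi_pct(value, investment):.0f}%")                       # ROI: 290%
print(f"Return multiple: {return_multiple(value, investment):.1f}x")  # Return multiple: 3.9x
```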

Measurement Cadence and Reporting

AI ROI measurement requires regular cadence with defined reporting structure. One-time measurement provides a snapshot. Continuous measurement enables optimization and proves sustained value.

Recommended cadence: Weekly utilization and engagement tracking, monthly proficiency assessment and skills gaps, quarterly business value calculation and ROI reporting, and annual portfolio review and strategic planning.

Reporting structure should include: Executive dashboard with key ROI metrics, departmental scorecards showing team-level performance, use case analysis comparing AI tools and applications, trend analysis tracking improvement over time, and action plans addressing measurement insights.

Common AI ROI Measurement Mistakes

Organizations implementing AI ROI measurement frequently make predictable mistakes that undermine framework effectiveness. Avoiding these pitfalls accelerates the path to accurate measurement and accountability.

Mistake 1: Measuring Adoption Instead of Outcomes

The most common mistake: Organizations track who uses AI, but not what users accomplish. Active user counts become the success metric. Boards ask about ROI. Teams show adoption dashboards. The disconnect persists.

Why this fails: High adoption does not guarantee productivity improvement. Users may engage superficially without workflow integration. Tools may be used incorrectly, limiting value generation. Satisfaction does not equal business value. And adoption metrics allow continued spending without proof of return.

The solution: Maintain adoption metrics for monitoring, but prioritize outcome measurement. Track time savings, quality improvements, and cost reductions. Connect usage patterns to business value generation. Report ROI alongside adoption for a complete picture. And make outcome metrics a primary driver of investment decisions.

Mistake 2: Accepting Vendor-Provided Metrics

Vendor dashboards show impressive numbers. Users love the tool. Productivity metrics look great. But vendor-defined metrics often inflate success and obscure actual business value.

The vendor metric problem: Definitions favor their product performance. Comparisons lack industry standardization. Important context gets omitted from reporting. Negative indicators remain hidden or minimized. And methodology lacks independent verification.

The solution: Define your own success criteria aligned with business goals. Implement independent measurement alongside vendor tools. Require transparent methodology for any vendor claims. Validate vendor metrics against ground truth data. And build internal measurement capability rather than dependency. Consider engaging third-party measurement specialists who can provide unbiased validation of AI performance claims.
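Validating vendor metrics against ground truth can start as a simple comparison. This sketch assumes you have independently measured values for the same period and the same metric definitions; the metric names, figures, and 10% tolerance are hypothetical.

```python
def validate_vendor_metrics(vendor, internal, tolerance=0.10):
    """Compare vendor-reported metrics with independently measured values.

    vendor, internal: dicts mapping metric name -> value for the same period
    and the same definition. tolerance is a hypothetical maximum relative gap.
    Returns metric -> (claimed, measured, relative_gap, verified).
    """
    report = {}
    for metric, claimed in vendor.items():
        measured = internal.get(metric)
        if measured is None:
            # No ground truth yet: the vendor figure stays unverified
            report[metric] = (claimed, None, None, False)
            continue
        if measured == 0:
            gap = 0.0 if claimed == 0 else float("inf")
        else:
            gap = abs(claimed - measured) / abs(measured)
        report[metric] = (claimed, measured, gap, gap <= tolerance)
    return report


vendor = {"active_users": 5000, "hours_saved_per_user_week": 4.0, "tasks_automated": 12000}
internal = {"active_users": 4800, "hours_saved_per_user_week": 1.5}

for metric, (claimed, measured, gap, ok) in validate_vendor_metrics(vendor, internal).items():
    status = "unverified" if gap is None else f"gap {gap:.0%}, {'ok' if ok else 'FLAG'}"
    print(f"{metric}: vendor {claimed}, internal {measured}, {status}")
```

In this made-up case the user count roughly matches, but the vendor's time-savings claim is far above what was measured internally, and one metric has no ground truth at all.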

Mistake 3: Failing to Measure Proficiency

Organizations assume tool access equals value creation. They measure who has licenses, not who possesses skills to use them effectively. The proficiency gap destroys potential ROI.

Proficiency measurement reveals: Users with access but insufficient training, advanced features going unused across the organization, productivity gains concentrated only among expert users, training investment needs and expected ROI, and the correlation between skill development and business outcomes.

Proficiency measurement enables: Targeted enablement reducing time-to-value, optimization of training budget allocation, identification of super users for peer learning, proof that skills development drives measurable ROI, and justification for change management investment.

Mistake 4: Inconsistent Measurement Practices

Each team measures AI differently. Marketing tracks one set of metrics. Sales uses another approach. IT has its own dashboard. Finance cannot consolidate or compare. Strategic decisions become impossible.

Inconsistent measurement creates: Inability to benchmark across departments or tools, conflicting claims about AI value and effectiveness, suboptimal budget allocation without comparable data, redundant measurement efforts wasting resources, and no unified view for executive decision making.

Establishing consistency requires: Standardized metrics framework across organization, centralized measurement platform and reporting, clear definitions and calculation methodologies, dashboards ensuring compliance with standards, and training for measurement practitioners.

Mistake 5: Measuring Too Late

Organizations deploy AI, then decide to measure ROI months later. By then, baseline data has vanished. Before-and-after comparison becomes impossible. ROI claims lack credibility.

Late measurement problems: No baseline for comparison means no proof of improvement. Memory and estimates replace actual data. Confounding factors obscure AI impact on outcomes. Skeptical stakeholders question unverifiable claims. And organizations cannot optimize because they lack a starting point.

The measurement timeline: Establish baseline metrics before AI deployment. Begin tracking immediately at launch. Monitor continuously through pilot and scaling. Report regularly on progress toward goals. And adjust measurement as understanding deepens.

The Measurement Maturity Path

Organizations evolve through predictable stages of AI ROI measurement maturity. Understanding this progression helps set realistic expectations and plan capability development.

Stage 1 - Ad Hoc Measurement

Inconsistent tracking driven by specific questions. Different teams measure differently. No standardized framework. Anecdotal evidence dominates discussions. Organizations cannot answer basic ROI questions confidently.

Stage 2 - Defined Measurement

Standardized metrics and definitions established. Measurement framework documented and communicated. Regular reporting cadence begins, but measurement is manual and resource-intensive. Data quality issues persist.

Stage 3 - Automated Measurement

Integrated measurement platform captures data automatically. Real-time dashboards available to stakeholders. Reduced manual effort enables broader coverage. Analytics capabilities emerge enabling deeper insights. Organizations begin optimizing based on measurement.

Stage 4 - Predictive Measurement

Advanced analytics predict ROI before investment. Machine learning identifies patterns across successful deployments. Framework guides proactive optimization decisions. Measurement becomes a competitive advantage. Organizations demonstrate market-leading AI value capture.

Most organizations today operate at Stage 1 or early Stage 2. Progressing requires investment in measurement infrastructure, training, and cultural change. However, organizations that demonstrate measurable productivity gains and financial returns secure continued investment. Those that cannot demonstrate returns face budget cuts and strategic questioning from business leaders.

Measuring Framework Success

The following metrics show whether a measurement framework is working across AI projects.

ROI and Accountability Metrics

- Quantified productivity improvements with baseline comparison showing efficiency gains
- Measurable cost savings and cost reduction through automation
- Revenue impact attribution to AI usage and AI-powered initiatives
- Clear ROI calculations by tool, use case, and department
- Executive confidence in AI value measurable through continued investment and budget allocation

Investment Visibility Metrics

- Cost per productive outcome calculated and tracked across AI systems
- Investment efficiency improvements over time demonstrating financial impact
- Spending allocation optimized based on measured returns and profitability
- Forecast accuracy for AI budgets and value realization
- Portfolio optimization reducing waste, increasing returns on AI investments

Productivity and Impact Metrics

- Time savings quantified across organization from AI-driven workflows
- Quality improvements measured and documented through data quality metrics
- Output increases tracked by team and use case
- Competitive advantage demonstrated through benchmarks comparing AI ROI to traditional ROI
- Business outcome achievement rates improving including customer experience, employee retention, and operational efficiency

Organizations implementing comprehensive ROI measurement frameworks demonstrate clearer value communication than those using adoption metrics alone. This clarity secures continued investment for AI initiatives, accelerates budget approvals from stakeholders, and enables data-driven optimization of AI solutions. The framework proves both short-term wins and long-term value creation from implementing AI.

Frequently Asked Questions

How do you measure AI ROI when productivity is hard to quantify?

Start with time savings, which is the most measurable productivity metric for AI tools. Track task completion time before and after AI implementation to establish clear baseline performance. Layer in quality improvements, output increases, and business outcomes. For knowledge work, measure tasks automated (hours saved through automation), research time reduced, content production increased, and decision speed improved. Combine quantitative metrics with business outcomes including customer satisfaction and employee productivity. Organizations typically find multiple measurable productivity indicators per use case when they systematically track outcomes using measurement frameworks.

What is the difference between AI adoption metrics and AI ROI metrics?

Adoption metrics show who is using AI and how frequently, essentially measuring access and activity. AI ROI metrics show business value generated including productivity gains, cost savings, revenue impact, and efficiency gains. You can have 90% adoption of AI technologies with zero ROI if users are not generating measurable value through AI-powered workflows. In the accountability era, executives need ROI metrics proving productivity gains and financial returns, not adoption dashboards showing login frequency. Successful AI requires measuring business outcomes, not just tool usage.

How quickly should organizations see measurable AI ROI?

Early productivity wins like time savings and task automation should be measurable within 30 to 60 days of AI adoption. Significant business impact, such as deal velocity, customer experience improvements, and operational efficiency, typically manifests in 3 to 6 months. Strategic value, including competitive advantage and capability transformation, requires 6 to 12 months to measure meaningfully. Organizations with proficiency development programs and change management support see measurable ROI faster than those relying on organic skill development. The key is establishing baseline metrics before deployment and tracking continuously through real-time analytics.

Can you prove AI ROI without sophisticated data infrastructure?

Yes. Start simple and build sophistication over time. Initial ROI measurement can use basic time tracking, before-and-after productivity comparison against baseline performance, and business outcome monitoring. A spreadsheet tracking hours saved per week per user can prove value immediately. As measurement matures, layer in usage analytics, outcome correlation, and automated KPI tracking. Organizations that prove initial ROI secure budget for more sophisticated measurement frameworks. Do not let perfect measurement block good measurement. Focus on demonstrating financial impact and efficiency gains from AI implementation, even with basic tools.
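A spreadsheet-level tracker really can be this small. The sketch below reads a hypothetical CSV export with one row per user per week and converts self-reported hours saved into dollars at the $75 fully-loaded rate used in the earlier example. Column names and data are assumptions, and self-reported hours should eventually be checked against measured task times.

```python
import csv
import io
from collections import defaultdict

# Hypothetical spreadsheet export: one row per user per week
SHEET = """week,user,hours_saved
2025-W10,ana,2.5
2025-W10,ben,1.0
2025-W11,ana,3.0
2025-W11,ben,1.5
"""


def weekly_value(csv_text, hourly_cost):
    """Sum self-reported hours saved per week and convert them to dollars."""
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["week"]] += float(row["hours_saved"])
    return {week: (hours, hours * hourly_cost) for week, hours in totals.items()}


for week, (hours, dollars) in weekly_value(SHEET, hourly_cost=75).items():
    print(f"{week}: {hours:.1f} hours saved, ${dollars:,.2f}")
```

To read a real export instead of the inline string, pass the contents of a file opened with `open(path, newline="")`.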

What AI productivity metrics matter most to CFOs and boards?

CFOs need three core metrics:

- ROI calculation comparing total value generated with total investment
- Productivity improvement percentage with baseline comparison, showing efficiency gains and employee productivity increases
- Cost per productive outcome, revealing investment efficiency

Boards additionally want strategic metrics including competitive advantage quantification, capability development pace, and long-term value trajectory. Frame everything financially for stakeholders. "AI delivered $8M in productivity value on a $2M investment, a 4x return (300% ROI)" resonates more than "Users love it and adoption is high." Business leaders need proof of bottom-line impact and profitability improvements.

How do you measure AI ROI across different departments with different use cases?

Establish universal value metrics such as time saved, cost reduced, and output increased, while allowing department-specific measures:

- Marketing measures content production, campaign velocity, and conversion rates using AI-powered tools.
- Sales tracks deal preparation time, win rates, and customer lifetime value improvements.
- Finance monitors close cycle speed, accuracy, and costs.
- IT measures support ticket resolution and operational efficiency, for example from AI-powered help-desk chatbots.
- Supply chain teams track forecasting accuracy and inventory optimization.

Each department needs metrics connecting AI usage to business outcomes. Aggregate them into organizational ROI through financial normalization: convert all productivity and efficiency gains to dollar values, then sum them to calculate total impact and demonstrate business value across all AI projects.
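Financial normalization can be sketched as one conversion factor per department metric. The departments, quantities, and dollars-per-unit factors below are hypothetical; in practice each factor is an assumption that finance and the department should agree on and document.

```python
from dataclasses import dataclass


@dataclass
class DepartmentGain:
    department: str
    metric: str              # department-specific measure
    quantity: float          # measured annual improvement, in that metric's own unit
    dollars_per_unit: float  # agreed conversion factor (an assumption to document)

    @property
    def dollar_value(self):
        return self.quantity * self.dollars_per_unit


def organizational_roi(gains, total_investment):
    """Normalize each department's gain to dollars, sum them, and apply the ROI formula."""
    total_value = sum(g.dollar_value for g in gains)
    return total_value, (total_value - total_investment) / total_investment * 100


gains = [
    DepartmentGain("Sales", "hours saved on deal preparation", 7_800, 75),
    DepartmentGain("IT", "tickets resolved without escalation", 4_000, 25),
    DepartmentGain("Finance", "close days saved over the year", 24, 5_000),
]
value, roi = organizational_roi(gains, total_investment=400_000)
print(f"Total value: ${value:,.0f}, ROI: {roi:.0f}%")  # Total value: $805,000, ROI: 101%
```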

What is the ROI of implementing comprehensive AI measurement frameworks?

Organizations see strong returns on measurement infrastructure through better decision-making, faster proficiency development, continued investment secured by demonstrated value (while competitors without proof face budget cuts), and new optimization opportunities. Investment in measurement frameworks pays for itself while enabling ongoing optimization of AI solutions and AI-driven workflows.

The intangible benefit often matters more: confident decision-making about the largest workforce investment in business history. With 72% of AI investments destroying value through waste, according to the Larridin State of Enterprise AI 2025 Report, measurement turns AI from a faith-based investment into a data-driven competitive advantage. Successful AI implementation requires measuring the achievement of business objectives, demonstrating financial returns, and proving bottom-line impact through efficiency gains, cost reduction, and productivity improvements.

About Larridin

Larridin is the AI Measurement Company. We measure AI utilization, proficiency, and business value across your entire enterprise so you can turn AI chaos into competitive advantage.

Larridin Scout discovers your complete AI landscape in days, measuring what your AI investments actually deliver: utilization, proficiency, and business impact across your organization. Transform AI spending into measurable ROI with comprehensive analytics showing productivity gains, cost savings, and operational efficiency improvements.


Jan 29, 2026