Publish date: 12/09/2025

Enterprise AI spending will reach $644 billion in 2025, yet half of organizations can't answer a simple question: what AI tools are your teams actually using? Without strategic policy management, this blind spot turns innovation investments into compliance liabilities and competitive risk.

Key Takeaway

Enterprise AI success demands comprehensive policy management that balances innovation with governance. Organizations that implement strategic AI usage policies and compliance frameworks gain a measurable competitive advantage while maintaining regulatory readiness and operational control across their AI ecosystem.

Key Terms

- AI Policy Management: The systematic approach to creating, implementing, and maintaining governance frameworks for artificial intelligence use across an organization. This includes defining acceptable AI use cases, establishing approval workflows, setting risk management protocols, and ensuring regulatory compliance while enabling AI adoption.

- AI Governance Framework: A structured system of principles, policies, and practices that guide responsible AI development and deployment. These frameworks establish accountability, transparency, and ethical AI practices while aligning AI initiatives with business objectives and regulatory requirements.

- Compliance Frameworks: Structured approaches for ensuring AI systems meet legal, regulatory, and ethical standards throughout their lifecycle. These frameworks help organizations navigate evolving AI regulations including GDPR, the EU AI Act, NIST AI Risk Management Framework, and ISO standards.

- Shadow AI: Unauthorized or unmonitored use of AI tools and technologies within an organization. Shadow AI creates significant governance blind spots and compliance risks, with nearly half of enterprises identifying it as their top AI governance challenge.

The Strategic Imperative for AI Policy Management

As organizations accelerate AI adoption across their operations, the need for comprehensive AI policy management has become a critical business imperative. The challenge facing C-suite executives is stark:

The Larridin State of Enterprise AI 2025 Report found that 49.57% of organizations identify Shadow AI and unauthorized tools as their top governance challenge. Gartner predicts that by 2026, 50% of governments worldwide will enforce responsible AI use through regulations and data privacy requirements.

The financial stakes are equally compelling. By 2027, fragmented AI regulation is projected to cover half the world's economies, driving $5 billion in compliance investment. Organizations without strategic policy management face not only regulatory exposure but also competitive disadvantage as AI-driven decision-making becomes central to business operations.

Navigating the Evolving Compliance Framework Landscape

Enterprise AI policy management must address a complex web of regulatory frameworks, each with distinct requirements and timelines. Understanding this landscape is essential for CAIOs, CFOs, CIOs, and COOs developing comprehensive governance strategies.

Key Regulatory Frameworks

The EU AI Act is “the first comprehensive regulation on AI by a major regulator anywhere,” and full enforcement is expected by 2026. The Act classifies AI systems by risk level: unacceptable-risk systems are prohibited, high-risk applications face strict governance requirements, limited-risk systems carry transparency obligations, and minimal-risk systems face few or no additional requirements. Non-compliance carries substantial penalties, with potential fines reaching €35 million or 7 percent of global revenue.
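As a rough illustration of how that penalty ceiling scales with company size, the sketch below assumes the cap is the higher of the two figures quoted above, which is how the Act frames fines for its most serious violations. The function name and the sample revenue figures are hypothetical and not drawn from any real case.

```python
FIXED_CAP_EUR = 35_000_000   # EUR 35 million, the fixed figure quoted above
REVENUE_SHARE = 0.07         # 7 percent of global revenue

def max_fine_eur(global_revenue_eur: float) -> float:
    """Upper bound on a fine, assuming the higher of the two caps applies."""
    return max(FIXED_CAP_EUR, REVENUE_SHARE * global_revenue_eur)

# Hypothetical revenue figures, for illustration only
for revenue in (100_000_000, 2_000_000_000):
    print(f"revenue EUR {revenue:,} -> cap EUR {max_fine_eur(revenue):,.0f}")
```

Under this assumption, the fixed EUR 35 million figure dominates for smaller companies, while the percentage figure takes over once global revenue exceeds EUR 500 million.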

In the United States, the NIST AI Risk Management Framework provides voluntary but widely adopted guidance for managing AI risks. The framework emphasizes four core functions: govern (establishing organizational policies), map (understanding AI contexts), measure (assessing system reliability and bias), and manage (implementing controls). NIST AI RMF has become the de facto standard for many organizations seeking to establish responsible AI practices.

Data privacy regulations, including GDPR, impose strict requirements on AI systems that process personal data. Organizations must ensure transparency in automated decision-making, maintain lawful data processing practices, and provide individuals with control over their data. These requirements directly affect how AI technologies use customer information and generate outputs.

Essential Components of Strategic AI Policy Management

Effective AI governance frameworks integrate multiple components for comprehensive oversight while enabling innovation. Organizations must address technical, operational, and ethical dimensions simultaneously.

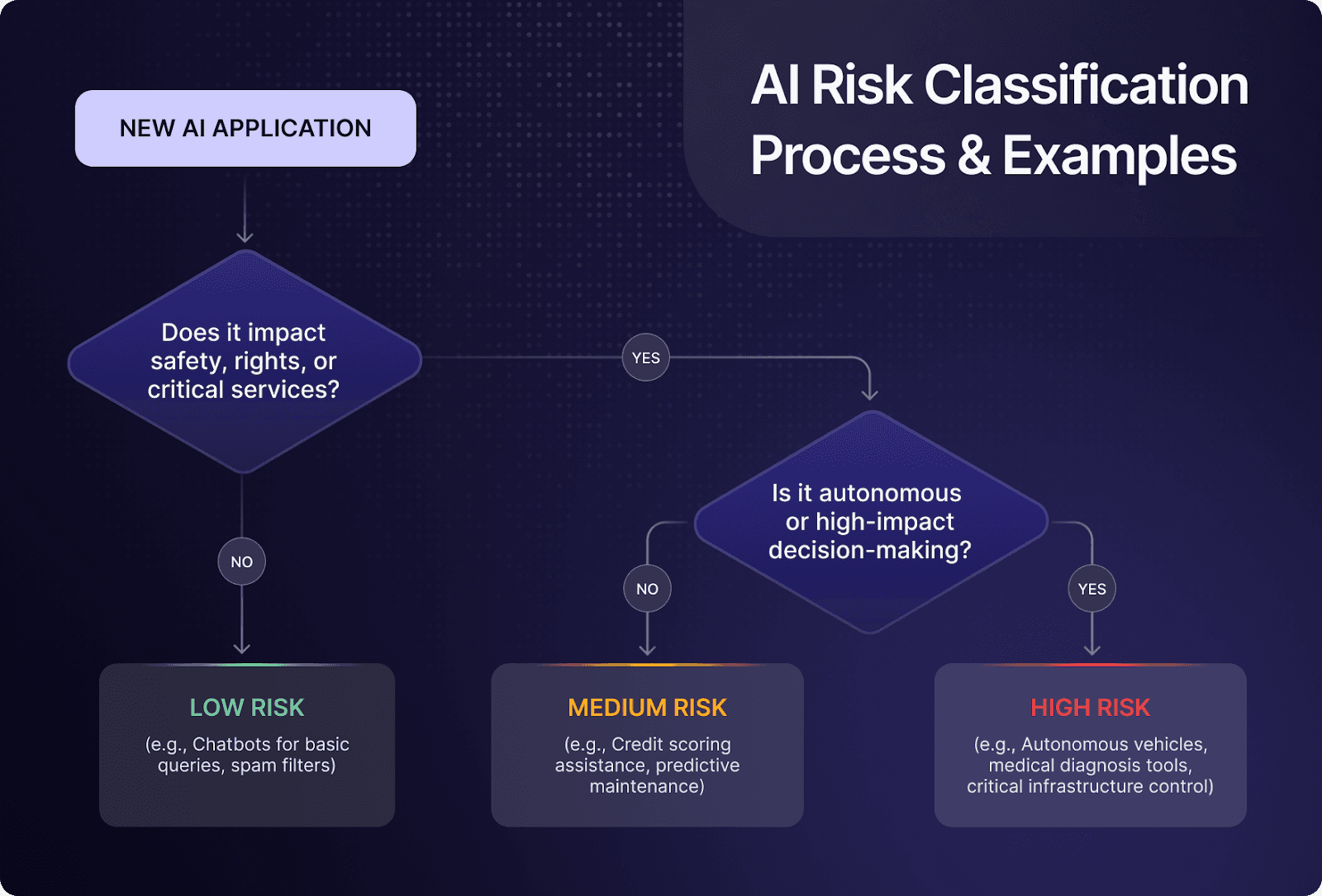

Risk Assessment and Classification

Risk management begins with systematic classification of AI use cases and applications. Organizations should maintain comprehensive inventories of all AI systems, documenting their purposes, data sources, decision-making capabilities, and affected populations. This visibility enables data-driven risk assessments and strategic infrastructure planning while identifying cybersecurity risks and compliance gaps proactively.

High-risk AI applications in sectors like healthcare, employment, and financial services require enhanced oversight. These systems need thorough impact assessments before deployment, regular validation for fairness and bias, and clear procedures for human review of automated decisions.
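One way to picture the inventory and classification described above is a small record type plus a tiering rule. This is a minimal sketch, not a prescribed schema: the `AISystem` fields mirror the attributes listed in the text, the sector list comes from the examples named above, and the sample systems are hypothetical. A real policy would define its own tiers and criteria.

```python
from dataclasses import dataclass, field
from typing import List

# Sectors the text names as high-risk; an actual policy would maintain its own list.
HIGH_RISK_SECTORS = {"healthcare", "employment", "financial services"}

@dataclass
class AISystem:
    """One inventory entry: purpose, data sources, decision role, affected people."""
    name: str
    purpose: str
    sector: str
    data_sources: List[str] = field(default_factory=list)
    makes_automated_decisions: bool = False
    affected_populations: List[str] = field(default_factory=list)

def risk_tier(system: AISystem) -> str:
    """Assign a tier from the sector alone (a deliberately simple assumption)."""
    return "high" if system.sector.lower() in HIGH_RISK_SECTORS else "standard"

def required_controls(system: AISystem) -> List[str]:
    """Controls the text attaches to high-risk systems; none added otherwise."""
    if risk_tier(system) == "high":
        return [
            "impact assessment before deployment",
            "regular validation for fairness and bias",
            "human review of automated decisions",
        ]
    return []

# Hypothetical inventory entries
inventory = [
    AISystem("resume-screener", "rank job applicants", "employment",
             ["applicant CVs"], True, ["job applicants"]),
    AISystem("meeting-notes-bot", "summarize internal meetings", "internal operations"),
]
for system in inventory:
    print(system.name, risk_tier(system), required_controls(system))
```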

Data Governance and Privacy

Robust data governance mechanisms safeguard data privacy and enhance transparency across the AI lifecycle. Organizations must establish adaptive policies for data collection, processing, and storage that align with regulatory requirements while maintaining operational efficiency.

Privacy regulations require organizations to collect only data necessary for specific purposes. AI systems must be designed to minimize data collection and use information solely for intended applications to reduce potential risks and build stakeholder trust.
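Data minimization can be enforced in code before a record ever reaches a model. The sketch below assumes a purpose-to-fields allowlist. The purposes, field names, and the `minimize` helper are all hypothetical, chosen only to illustrate the idea.

```python
# Hypothetical allowlist: which fields each processing purpose may use.
ALLOWED_FIELDS = {
    "support_ticket_triage": {"ticket_id", "subject", "body"},
    "churn_prediction": {"account_id", "plan", "monthly_usage"},
}

def minimize(record: dict, purpose: str) -> dict:
    """Return only the fields approved for this purpose.

    Unknown purposes are rejected rather than passed through, so a new
    use case must be reviewed before any data flows to it.
    """
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"no approved field list for purpose {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}

ticket = {
    "ticket_id": 1017,
    "subject": "Login fails",
    "body": "I cannot sign in since yesterday.",
    "customer_email": "person@example.com",  # not needed for triage, so dropped
}
print(minimize(ticket, "support_ticket_triage"))
```

Failing closed on unknown purposes is a design choice: it trades some convenience for the guarantee that information is used solely for applications that have been explicitly approved.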

Explainability and Transparency

Transparency requirements extend beyond technical documentation to user-facing explanations of AI decisions and operations. Organizations must document model inputs and decision logic and keep AI outputs explainable to relevant stakeholders.

Overcoming AI Governance Implementation Challenges

Despite widespread recognition of the importance of AI governance, implementation remains challenging. Only 18% of organizations have an artificial intelligence council authorized to make decisions on responsible AI governance, highlighting the urgent need for structured oversight.

Organizational Fragmentation

AI governance suffers when ownership fragments across legal, compliance, technology, and business functions. Effective policy management requires cross-functional collaboration with clear lines of accountability. Organizations should establish governance structures that bridge organizational silos and enable coordinated decision-making on AI initiatives.

Continuous Monitoring and Adaptation

AI systems require ongoing oversight rather than point-in-time assessments. Implementing automated tools and frameworks enables real-time oversight of AI systems through testing and evaluation, compliance dashboards, and cybersecurity monitoring. Continuous education on AI risks and compliance ensures teams can navigate the rapidly evolving landscape effectively.

Building Strategic AI Governance Capability

Organizations that treat AI governance as a strategic capability rather than a compliance burden gain competitive advantage through faster, more confident AI deployment. The foundation begins with comprehensive usage analytics that reveal actual AI adoption patterns across the enterprise.

Understanding what AI tools teams use, how effectively they leverage AI technologies, and where shadow AI creates governance blind spots enables data-driven policy development. This intelligence allows organizations to balance AI innovation acceleration with appropriate governance and cybersecurity requirements.

Strategic AI policy management connects usage analytics with adoption acceleration. Organizations can systematically scale successful AI practices enterprise-wide through secure, governed access to approved models and tools. Centralized AI infrastructure that enables rather than restricts innovation becomes possible when built on comprehensive usage intelligence.

Future-Proofing Your AI Governance Strategy

The regulatory environment will continue accelerating, demanding traceability, resilience, and transparency at levels few organizations have achieved. By 2027, Gartner expects three out of four AI platforms to include built-in tools for responsible AI and oversight, making governance capabilities a competitive differentiator.

Organizations should develop AI governance frameworks that map their AI portfolio to evolving regulatory requirements across different jurisdictions. This includes implementing AI trust, risk, and security management controls that reduce the inaccurate or illegitimate information that leads to faulty decision-making.

Vendor accountability forms another critical element. Organizations must enforce contractual obligations for responsible AI governance with third-party providers, mitigating risks from unethical or noncompliant outcomes. Many compliance failures occur through external AI tools rather than internal systems, making vendor evaluation processes essential.

Moving from Compliance to Competitive Advantage

AI usage policies and compliance frameworks are far more than regulatory obligations. They establish the foundation for confident, accelerated AI adoption that delivers measurable business value. Organizations that embed governance into AI workflows from the start avoid the costly retrofitting required when compliance becomes an afterthought.

The organizations thriving in the AI era are those turning compliance into operational capability: connected, explainable, and verifiable at any time. This transformation requires comprehensive usage intelligence, systematic governance structures, and continuous adaptation as AI technologies and regulations evolve.

Strategic AI policy management positions enterprises to harness artificial intelligence responsibly and effectively, transforming AI from scattered experiments into coordinated competitive advantage.

Frequently Asked Questions

What is the difference between AI governance and AI policy management?

AI governance is the overarching framework of principles and practices that guide responsible AI use across your organization. AI policy management is the operational execution of that governance: the systematic process of creating, implementing, and maintaining specific policies, approval workflows, and compliance procedures. Think of governance as your strategy and policy management as the tactical implementation that makes that strategy actionable.

How do we start building an AI policy framework when we don't know what AI tools our teams are using?

Begin with comprehensive AI usage analytics to discover your complete AI landscape, including shadow AI. Deploy monitoring tools that reveal what AI technologies teams use, how frequently, and for what purposes. This visibility provides the foundation for evidence-based policy development. Without understanding current adoption patterns, policies risk being either too restrictive (hindering innovation) or too permissive (creating compliance gaps).
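At its simplest, shadow AI discovery means comparing observed usage against an approved list. The sketch below assumes usage events are already available as (team, tool) pairs, for example from network or SSO logs. The tool names, the approved list, and the `find_shadow_ai` helper are hypothetical, and no particular monitoring product is implied.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Hypothetical approved-tool list maintained by the governance team.
APPROVED_TOOLS = {"approved-chat-assistant", "approved-code-helper"}

def find_shadow_ai(usage_events: Iterable[Tuple[str, str]]) -> Dict[str, Dict[str, int]]:
    """Group unapproved tool usage as {tool: {team: event_count}}."""
    counts = Counter(
        (team, tool) for team, tool in usage_events if tool not in APPROVED_TOOLS
    )
    report: Dict[str, Dict[str, int]] = {}
    for (team, tool), n in counts.items():
        report.setdefault(tool, {})[team] = n
    return report

# Hypothetical events for illustration
events = [
    ("sales", "approved-chat-assistant"),
    ("sales", "unvetted-summarizer"),
    ("sales", "unvetted-summarizer"),
    ("hr", "unvetted-summarizer"),
]
print(find_shadow_ai(events))
```

A report like this shows which unapproved tools are in use, by whom, and how often. That is the evidence needed to decide whether to approve, replace, or block each one.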

What are the most critical compliance frameworks we need to address for enterprise AI?

The three primary frameworks are: (1) the EU AI Act, which classifies systems by risk level, with full enforcement expected by 2026; (2) GDPR, for data protection and privacy requirements when AI systems process personal data; and (3) the NIST AI Risk Management Framework, which provides voluntary but widely adopted guidance across four functions: govern, map, measure, and manage. Organizations should also monitor sector-specific regulations in healthcare, financial services, and employment.

How can we balance AI innovation speed with compliance requirements?

Strategic AI policy management treats compliance as an enabler rather than a barrier. Establish pre-approved AI tools, model libraries, and use case frameworks that teams can access immediately within defined guardrails. This approach accelerates adoption of vetted solutions while maintaining control. Organizations with centralized governance and clear approval pathways deploy AI-powered solutions faster than those treating compliance as an afterthought requiring extensive retrofitting.

What's the biggest risk of not having formal AI usage policies?

Shadow AI—unauthorized or unmonitored AI tool adoption. Nearly 50% of organizations identify this as their top governance challenge. Shadow AI creates multiple risks: compliance violations you can't detect, data exposure through unapproved systems, inconsistent AI practices across departments, and inability to measure ROI on AI investments. By 2027, fragmented AI regulation will drive $5 billion in compliance investment, with unmonitored AI usage exposing organizations to substantial fines.

How often should AI policies be updated?

AI policies require continuous monitoring and quarterly reviews at minimum, with immediate updates when regulations change or new artificial intelligence advancements emerge. The regulatory landscape is evolving rapidly—generative AI capabilities, agentic AI systems, and new compliance frameworks demand adaptive policy management. Implement automated tools that enable real-time oversight and establish clear processes for policy amendments as your AI adoption matures.

Do we need different policies for different types of AI systems?

Yes. Risk-based policy frameworks should classify AI systems by their potential impact. High-risk applications in healthcare diagnostics, employment decisions, or financial services require enhanced oversight including impact assessments, bias validation, and human review procedures. Lower-risk applications like internal automation or content generation may operate under streamlined approval processes. This tiered approach optimizes resources while ensuring appropriate governance where it matters most.

Are you ready to transform your AI governance from a compliance burden into a strategic capability that accelerates innovation while maintaining control?

Jan 29, 2026